
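For most business applications, basic AI chat isn't enough. Organizations need AI that can pull information from databases, integrate with existing tools, handle multi-step processes, and make decisions independently.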

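This post demonstrates how to quickly build sophisticated AI agents with Strands Agents, scale them reliably with Amazon Bedrock AgentCore, and make them available through LibreChat's familiar interface to drive immediate user adoption across your institution.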

Challenges with basic AI chat interfaces

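Although basic AI chat interfaces can answer questions and generate content, educational institutions need capabilities that simple chat can't deliver: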

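- Contextual decision-making – A student asking "Which courses should I take?" needs an agent that can access their transcript, check prerequisites, verify graduation requirements, and consider schedule conflicts, not just generic course descriptions.

- Multi-step workflows – Degree planning requires analyzing current progress, identifying remaining requirements, recommending course sequences, and updating recommendations as students make decisions.

- Institutional data integration – Effective educational AI must connect to student information systems, learning management services, academic databases, and institutional repositories to provide relevant, personalized guidance.

- Persistent memory and learning – Agents need to remember previous interactions with students, track their academic journey across semesters, and build an understanding of individual learning patterns and needs.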

Combining open source flexibility with enterprise infrastructure

The integration presented in this post shows how three technologies work together to address these challenges:

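- Strands Agents – Build sophisticated multi-agent workflows with just a few lines of code

- Amazon Bedrock AgentCore – Scale agents reliably with serverless, pay-per-use deployment

- LibreChat – Give users a familiar chat interface that drives immediate adoption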

Strands Agents overview

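Strands Agents is an open source SDK that takes a model-driven approach to building and running AI agents in just a few lines of code. Unlike LibreChat's simple agent implementation, Strands supports sophisticated patterns. These include multi-agent orchestration through workflow, graph, and swarm tools; semantic search for managing thousands of tools; and advanced reasoning capabilities with deep analytical thinking cycles. The framework simplifies agent development by building on the ability of state-of-the-art models to plan, chain thoughts, call tools, and reflect. Its flexible architecture and comprehensive observability let it scale from local development to production deployment.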
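To make the model-driven idea concrete, here is a deliberately tiny, standard-library-only sketch of the loop such a framework runs. The model decides whether to call a tool or to answer, tool results are fed back into the conversation, and the loop ends when the model answers. This is not the Strands API; Strands ships its own `Agent` class and `@tool` decorator. `stub_model`, `lookup_credits`, `register_tool`, and the message format are hypothetical stand-ins for an LLM and a student information system.

```python
from typing import Callable

# Hypothetical tool registry: tool name -> plain Python function.
TOOLS: dict[str, Callable[..., str]] = {}


def register_tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@register_tool
def lookup_credits(student_id: str) -> str:
    # Hypothetical stand-in for a student information system query.
    return {"s1": "84 credits completed"}.get(student_id, "unknown student")


def stub_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM: requests a tool after a user turn, answers after a tool turn."""
    last = messages[-1]
    if last["role"] == "user":
        return {"tool": "lookup_credits", "args": {"student_id": "s1"}}
    return {"answer": f"Based on your record: {last['content']}."}


def run_agent(prompt: str, model=stub_model, max_steps: int = 5) -> str:
    """Alternate model decisions and tool calls until the model produces an answer."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        decision = model(messages)
        if "answer" in decision:
            return decision["answer"]
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("How many credits have I completed?"))
# prints: Based on your record: 84 credits completed.
```

The `max_steps` cap is a common safeguard against a model that keeps requesting tools without ever answering.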

Amazon Bedrock AgentCore overview

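Amazon Bedrock AgentCore is a comprehensive set of enterprise-grade services that help developers quickly and securely deploy and operate AI agents at scale. Developers can use the framework and model of their choice, hosted in Amazon Bedrock or elsewhere. The services are composable and work with popular open source frameworks and many models, so you don't have to choose between open source flexibility and enterprise-grade security and reliability.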

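Amazon Bedrock AgentCore includes modular services that can be used together or independently:

- Runtime – A secure, serverless runtime for deploying and scaling dynamic agents.
- Gateway – Converts APIs and AWS Lambda functions into agent-compatible tools.
- Memory – Manages short-term and long-term memory.
- Identity – Provides secure access management.
- Observability – Provides real-time visibility into agent performance.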

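The key Amazon Bedrock AgentCore service used in this integration is Amazon Bedrock AgentCore Runtime. It is a secure, serverless runtime purpose-built for deploying and scaling dynamic AI agents and tools with the open source framework (including LangGraph, CrewAI, and Strands Agents), protocol, and model of your choice. Amazon Bedrock AgentCore Runtime is designed to provide agentic workloads with industry-leading extended runtime support, fast cold starts, true session isolation, built-in identity, and support for multimodal payloads. In typical serverless models, functions start, execute, and terminate immediately. Amazon Bedrock AgentCore Runtime instead provides dedicated microVMs that can persist for up to 8 hours. This enables complex multi-step agent workflows in which each subsequent call builds on the accumulated context and state of earlier interactions in the same session.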
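Follow-up calls only build on earlier context when they reach the same session, so the caller has to send a stable session identifier with every turn. The sketch below assumes the boto3 `bedrock-agentcore` client and its `invoke_agent_runtime` operation, and derives the session ID deterministically from a user ID and a conversation ID. The `{"prompt": ...}` payload shape is an assumption that depends on how the deployed agent's entry point parses its input. `runtime_session_id` and `ask_agent` are hypothetical helper names.

```python
import hashlib
import json


def runtime_session_id(user_id: str, conversation_id: str) -> str:
    """Map one LibreChat conversation to one stable AgentCore session ID.

    A SHA-256 hex digest is 64 characters long, comfortably above the minimum
    length the API documents for runtimeSessionId.
    """
    key = f"{user_id}:{conversation_id}".encode("utf-8")
    return hashlib.sha256(key).hexdigest()


def ask_agent(client, runtime_arn: str, user_id: str, conversation_id: str, prompt: str) -> dict:
    """Send one turn to a deployed agent and return its decoded JSON reply.

    `client` is expected to be boto3.client("bedrock-agentcore"); any object with
    a compatible invoke_agent_runtime method (such as a test double) also works.
    """
    response = client.invoke_agent_runtime(
        agentRuntimeArn=runtime_arn,
        runtimeSessionId=runtime_session_id(user_id, conversation_id),
        # Payload shape is an assumption: it must match what the agent's entry point parses.
        payload=json.dumps({"prompt": prompt}).encode("utf-8"),
    )
    # For a non-streaming agent, the reply body is a readable stream of JSON bytes.
    return json.loads(response["response"].read())
```

Every call for the same `(user_id, conversation_id)` pair carries the same session ID, so it can reach the same session while that session is still alive. A new conversation gets a fresh, isolated session.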

LibreChat overview

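LibreChat has emerged as a leading open source alternative to commercial AI chat interfaces, giving educational institutions a powerful solution for deploying conversational AI at scale. Built with flexibility and extensibility in mind, LibreChat offers several key advantages for higher education: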

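- Multi-model support – LibreChat supports integration with multiple AI providers, so institutions can choose the most appropriate model for each use case while avoiding vendor lock-in.

- User management – Robust authentication and authorization systems help institutions manage access for students, faculty, and staff with appropriate permissions and usage controls.

- Conversation management – Students and faculty can organize their AI interactions into projects and topics, creating a more structured learning environment.

- Customizable interface – The solution can be branded and customized to match institutional identity and specific teaching needs.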

Integration benefits

Integrating Strands Agents with Amazon Bedrock AgentCore and LibreChat creates unique benefits. Together, these services go well beyond what any one of them could achieve on its own:

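- Seamless agent experience through a familiar interface – LibreChat's intuitive chat interface becomes the gateway to sophisticated agent workflows. Users can trigger complex multi-step processes, data analysis, and external system integrations through natural conversation, without learning new interfaces or complex APIs.

- Dynamic agent loading and management – Unlike static AI chat implementations, this integration supports dynamic agent loading with access management. New agent applications can be deployed separately and made available to users without LibreChat updates or downtime, enabling rapid agent development.

- Enterprise-grade security and scalability – Amazon Bedrock AgentCore Runtime provides complete isolation for each user session. Every session runs with its own isolated CPU, memory, and file system resources. This fully separates user sessions from one another, protects stateful agent reasoning, and helps prevent cross-session data contamination. The service can scale to thousands of agent sessions in seconds, and developers pay only for actual usage. That makes it well suited to educational institutions that must support large student populations with varying usage patterns.

- Built-in AWS resource integration – Organizations already running infrastructure on AWS can connect their existing resources (databases, data lakes, Lambda functions, and applications) to Strands Agents without complex integration or data movement. Agents can access these resources directly and surface insights through the LibreChat interface. This turns existing AWS investments into intelligent conversational experiences, such as querying Amazon Relational Database Service (Amazon RDS) databases, analyzing data in Amazon Simple Storage Service (Amazon S3), or integrating with existing microservices.

- Cost-effective agent compute – Combining LibreChat's efficient architecture with the Amazon Bedrock AgentCore pay-per-use model lets organizations deploy sophisticated agent applications without the high fixed costs typically associated with enterprise AI systems. You pay only for actual agent compute and tool usage.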
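The dynamic loading described above could work in the following way, assuming the LibreChat custom endpoint is configured with `models.fetch: true` so that LibreChat asks the endpoint for its model list. The integration answers an OpenAI-style `GET /models` request from a registry of deployed agents. Adding a registry entry then makes a new agent selectable without changing LibreChat. `AGENT_REGISTRY`, its placeholder ARN, and `handler` are hypothetical. A real deployment would likely keep the registry in a data store rather than in code.

```python
import json

# Hypothetical registry: model IDs shown in LibreChat -> AgentCore runtime ARNs.
# The ARN below is a placeholder, not a real resource.
AGENT_REGISTRY: dict[str, str] = {
    "user-activity-analysis": "arn:aws:bedrock-agentcore:REGION:ACCOUNT_ID:runtime/EXAMPLE",
}


def list_models_body(registry: dict[str, str]) -> dict:
    """Build an OpenAI-style model list from the registry, one entry per agent."""
    return {
        "object": "list",
        "data": [
            {"id": name, "object": "model", "owned_by": "strands-agents"}
            for name in sorted(registry)
        ],
    }


def handler(event, context=None):
    """API Gateway (Lambda proxy integration) handler for GET /models."""
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(list_models_body(AGENT_REGISTRY)),
    }
```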

Agent use cases in higher education settings

The integration of LibreChat with Strands Agents enables numerous educational applications that demonstrate the solution’s versatility and power:

- A course recommendation agent can analyze a student’s academic history, current enrollment, and career interests to suggest relevant courses. By integrating with the student information system, the agent can make sure recommendations consider prerequisites, schedule conflicts, and graduation requirements.

- A degree progress tracking agent can help students understand their specific degree requirements and provide guidance on remaining coursework, elective options, and timeline optimization.

- Agents can be configured with access to academic databases and institutional repositories. This helps students and faculty discover relevant research papers and resources, and lets the agents provide guidance on academic writing, citation formats, and research methodology specific to different disciplines.

- Agents can handle routine student inquiries about registration, deadlines, and campus resources, freeing up staff time for more complex student support needs.
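The course recommendation case combines several deterministic checks that an agent would typically call as a tool rather than leave to the model. The following sketch keeps only sections that meet three conditions: they count toward remaining requirements, their prerequisites are complete, and they do not overlap the student's current schedule. The `Section` records and course codes are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Section:
    """A hypothetical course section: prerequisites plus one weekly meeting."""
    course: str
    prereqs: frozenset
    day: str
    start: int  # minutes after midnight
    end: int    # minutes after midnight


def overlaps(a: Section, b: Section) -> bool:
    """Two meetings clash if they share a day and their time ranges intersect."""
    return a.day == b.day and a.start < b.end and b.start < a.end


def recommend(catalog, completed, enrolled, required):
    """Return course codes that are still required, unlocked, and conflict-free."""
    remaining = set(required) - set(completed)
    picks = []
    for section in catalog:
        if section.course not in remaining:
            continue  # already done or not part of the degree
        if not section.prereqs <= set(completed):
            continue  # prerequisites missing
        if any(overlaps(section, current) for current in enrolled):
            continue  # schedule conflict with current enrollment
        picks.append(section.course)
    return picks


catalog = [
    Section("CS201", frozenset({"CS101"}), "Mon", 600, 690),
    Section("CS301", frozenset({"CS201"}), "Tue", 600, 690),
    Section("MATH210", frozenset(), "Mon", 780, 870),
    Section("STAT200", frozenset(), "Wed", 540, 630),
]
enrolled = [Section("HIST100", frozenset(), "Mon", 630, 720)]
print(recommend(catalog, {"CS101"}, enrolled, {"CS201", "CS301", "MATH210", "STAT200"}))
# prints: ['MATH210', 'STAT200']
```

Here CS201 is unlocked but clashes with the Monday history section, and CS301 still needs CS201. Keeping these rules in code lets the agent explain why a course was excluded instead of guessing.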

Refer to the following GitHub repo for Strands Agent code examples for educational use cases.

Solution overview

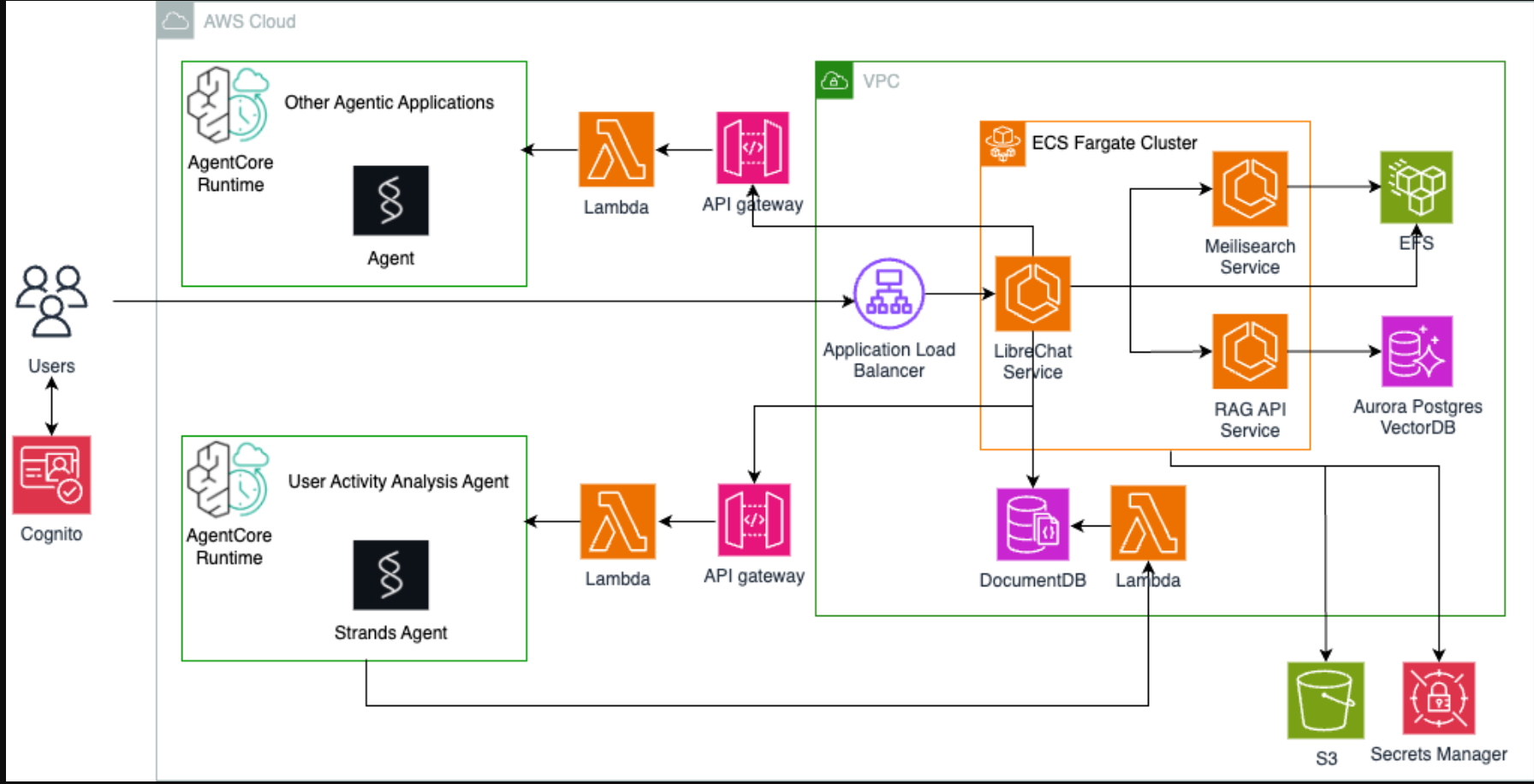

The architecture diagram above illustrates the overall system design for deploying LibreChat with Strands Agents integration. Strands Agents is deployed on Amazon Bedrock AgentCore Runtime, a secure, serverless runtime purpose-built for deploying and scaling dynamic AI agents and tools built with open source frameworks such as Strands Agents.

The solution architecture includes several key components:

- LibreChat core services – The core chat interface runs on an Amazon Elastic Container Service (Amazon ECS) cluster with AWS Fargate. It includes LibreChat for the user-facing experience, Meilisearch for enhanced search capabilities, and Retrieval Augmented Generation (RAG) API services for document retrieval.

- LibreChat supporting infrastructure – This solution uses the following services:
  - Amazon Elastic File System (Amazon EFS) stores Meilisearch indexes and user-uploaded files.
  - Amazon Aurora PostgreSQL-Compatible Edition serves as the vector database for the RAG API.
  - Amazon S3 stores LibreChat configurations.
  - Amazon DocumentDB manages user, session, and conversation data.
  - AWS Secrets Manager manages access to resources.

- Strands Agents integration – This solution integrates Strands Agents (hosted by Amazon Bedrock AgentCore Runtime) with LibreChat through custom endpoints built with Lambda and Amazon API Gateway. This integration pattern enables dynamic loading of agents in LibreChat for advanced generative AI capabilities. In particular, the solution showcases a user activity analysis agent that draws insights from LibreChat logs.

- Authentication and security – The integration between LibreChat and Strands Agents implements a multi-layered authentication approach that maintains security without compromising user experience or administrative simplicity. When a student or faculty member selects a Strands Agent from LibreChat’s interface, the authentication flow operates seamlessly in the background through several coordinated layers:

- User authentication – LibreChat handles user login through your institution’s existing authentication system, with comprehensive options including OAuth, LDAP/AD, or local accounts as detailed in the LibreChat authentication documentation.

- API Gateway security – After users are authenticated to LibreChat, the system automatically handles API Gateway security by authenticating each request using preconfigured API keys.

- Service-to-service authentication – The underlying Lambda function uses AWS Identity and Access Management (IAM) roles to securely invoke Amazon Bedrock AgentCore Runtime where the Strands Agent is deployed.

- Resource access control – Strands Agents operate within defined permissions to access only authorized resources.
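LibreChat custom endpoints speak the OpenAI chat completions format, so the Lambda function behind API Gateway has to translate between that format and the agent. The sketch below assumes a non-streaming request and a hypothetical `invoke_agent(model, prompt)` callable that wraps the IAM-authorized AgentCore Runtime call. API key validation is left to API Gateway, as described above, so the handler does not repeat it. `make_handler`, `last_user_text`, and `chat_completion` are hypothetical names.

```python
import json
import time
import uuid


def last_user_text(messages: list) -> str:
    """Extract the newest user message; content may be a string or a list of parts."""
    for message in reversed(messages):
        if message.get("role") == "user":
            content = message.get("content") or ""
            if isinstance(content, list):
                return " ".join(p.get("text", "") for p in content if p.get("type") == "text")
            return content
    raise ValueError("request contains no user message")


def chat_completion(model: str, text: str) -> dict:
    """Wrap agent output in an OpenAI-style, non-streaming chat completion body."""
    return {
        "id": f"chatcmpl-{uuid.uuid4().hex}",
        "object": "chat.completion",
        "created": int(time.time()),
        "model": model,
        "choices": [
            {"index": 0, "message": {"role": "assistant", "content": text}, "finish_reason": "stop"}
        ],
    }


def make_handler(invoke_agent):
    """Build a Lambda proxy handler around a hypothetical invoke_agent(model, prompt) -> str."""
    def handler(event, context=None):
        request = json.loads(event["body"])
        answer = invoke_agent(request["model"], last_user_text(request["messages"]))
        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps(chat_completion(request["model"], answer)),
        }
    return handler
```

Forwarding only the latest user message relies on the AgentCore session to hold earlier turns. If sessions are not tied to conversations, forwarding the full `messages` list is the alternative. Streaming responses, which LibreChat may request, would need additional handling.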

Deployment process

This solution uses the AWS Cloud Development Kit (AWS CDK) and AWS CloudFormation to handle the deployment through several automated phases. We use a log analysis agent as an example to demonstrate the deployment process. The agent lets administrators analyze LibreChat logs through natural language queries.

LibreChat is deployed as a containerized service on Amazon ECS with Fargate. It is integrated with supporting services, including virtual private cloud (VPC) networking, an Application Load Balancer (ALB), and the complete data layer with Aurora PostgreSQL-Compatible, DocumentDB, Amazon EFS, and Amazon S3 storage. Security is built in with appropriate IAM roles, security groups, and secrets management.

The user activity analysis agent provides insights into how students interact with AI tools, identifying peak usage times, popular topics, and potential areas where students might need additional support. The agent is automatically provisioned using the following CloudFormation template. The template deploys the Strands agent on Amazon Bedrock AgentCore Runtime and provisions three resources:

- A Lambda function that invokes the agent.
- An API Gateway API that exposes the agent as a URL endpoint.
- A second Lambda function that reads the LibreChat logs stored in DocumentDB.

The agent uses this second Lambda function as a tool.
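The activity-analysis tool itself can stay simple. The following sketch counts user-authored messages per hour and returns the busiest hours. It assumes records shaped like documents in LibreChat's `messages` collection, with an `isCreatedByUser` flag and a `createdAt` datetime; verify this assumption against your deployed schema. Fetching those documents from DocumentDB (for example with pymongo, or by pushing the grouping into an aggregation pipeline) is left out. `peak_usage_hours` is a hypothetical name.

```python
from collections import Counter


def peak_usage_hours(messages, top_n: int = 3):
    """Return (hour, message_count) pairs for the busiest hours, busiest first.

    Assumes LibreChat-style message documents with an `isCreatedByUser` flag and a
    `createdAt` datetime; pymongo returns naive UTC datetimes by default, so hours are UTC.
    """
    counts = Counter(
        message["createdAt"].hour
        for message in messages
        if message.get("isCreatedByUser")  # ignore assistant replies
    )
    return counts.most_common(top_n)
```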

The following code shows how to configure LibreChat to make the agent a custom endpoint:

After the stack is deployed successfully, you can log in to LibreChat, select the agent, and start chatting. The following screenshot shows an example question that the user activity analysis agent can help answer, where it reads the LibreChat user activities from DocumentDB and generates an answer.
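For reference, a LibreChat custom endpoint entry of the kind described above might contain the fields in the following Python sketch. It builds one entry for the `endpoints.custom` list in `librechat.yaml` and prints it as JSON, which YAML 1.2 parsers accept. The endpoint name, environment variable, and URL are placeholders. The sketch assumes LibreChat resolves `${...}` references in `headers` as it does in `apiKey`; check the LibreChat custom endpoint documentation for your version.

```python
import json


def strands_endpoint(base_url: str, agent_ids: list) -> dict:
    """One entry for the endpoints.custom list in librechat.yaml (field values are illustrative)."""
    return {
        "name": "Strands Agents",
        # ${...} references are resolved by LibreChat from its environment.
        "apiKey": "${STRANDS_AGENTS_API_KEY}",
        "baseURL": base_url,
        # API Gateway expects API keys in the x-api-key header.
        "headers": {"x-api-key": "${STRANDS_AGENTS_API_KEY}"},
        "models": {"default": agent_ids, "fetch": False},
        # Skip LLM-generated titles so each chat does not trigger an extra agent call.
        "titleConvo": False,
        "modelDisplayLabel": "Strands Agents",
    }


fragment = {
    "endpoints": {
        "custom": [
            strands_endpoint(
                "https://EXAMPLE.execute-api.REGION.amazonaws.com/prod",  # placeholder URL
                ["user-activity-analysis"],
            )
        ]
    }
}
# JSON is valid YAML 1.2, so this output can be merged into librechat.yaml.
print(json.dumps(fragment, indent=2))
```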

Deployment considerations and best practices

When deploying this LibreChat and Strands Agents integration, organizations should carefully consider several key factors that can significantly impact both the success of the implementation and its long-term sustainability.

Security and compliance form the foundation of any successful deployment, particularly in educational environments where data protection is paramount. Organizations must implement robust data classification schemes to maintain appropriate handling of sensitive information, and role-based access controls make sure users only access AI capabilities and data appropriate to their roles. Beyond traditional perimeter security, a layered authorization approach becomes critical when deploying AI systems that might access multiple data sources with varying sensitivity levels. This involves implementing multiple authorization checks throughout the application stack, including service-to-service authorization, trusted identity propagation that carries the end-user’s identity through the system components, and granular access controls that evaluate permissions at each data access point rather than relying solely on broad service-level permissions. Such layered security architectures help mitigate risks like prompt injection vulnerabilities and unauthorized cross-tenant data access, making sure that even if one security layer is compromised, additional controls remain in place to protect sensitive educational data. Regular compliance monitoring becomes essential, with automated audits and checks maintaining continued adherence to relevant data protection regulations throughout the system’s lifecycle, while also validating that layered authorization policies remain effective as the system evolves.
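The idea of evaluating permissions at each data access point can be shown in a few lines. In the following sketch, the end user's identity travels with the request down to the tool. The tool re-checks authorization itself, even though upstream layers (API key, IAM role) have already passed. A prompt-injected request for another user's records is therefore still refused. `Caller`, `AccessDenied`, `fetch_activity`, and the `admin` role are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Caller:
    """End-user identity propagated from LibreChat through the Lambda to the tool (hypothetical)."""
    user_id: str
    roles: frozenset


class AccessDenied(Exception):
    """Raised when the propagated identity may not read the requested records."""


def fetch_activity(caller: Caller, target_user_id: str, store: dict) -> list:
    """Return a user's activity records after an authorization check at the access point.

    Admins may read any user's records; everyone else may read only their own.
    """
    if "admin" not in caller.roles and caller.user_id != target_user_id:
        raise AccessDenied(f"{caller.user_id} may not read records of {target_user_id}")
    return store.get(target_user_id, [])
```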

Cost management requires a strategic approach that balances functionality with financial sustainability. Organizations must prioritize their generative AI spending based on business impact and criticality while maintaining cost transparency across customer and user segments. Implementing comprehensive usage monitoring helps organizations track AI service consumption patterns and identify optimization opportunities before costs become problematic.

The human element of deployment often proves more challenging than the technical implementation. Faculty training programs should provide comprehensive guidance on integrating AI tools into teaching practices, focusing not just on how to use the tools but how to use them effectively for educational outcomes. Student onboarding requires clear guidelines and tutorials that promote both effective AI interaction and academic integrity. Perhaps most importantly, establishing continuous feedback loops makes sure the system evolves based on actual user experiences and measured educational outcomes rather than assumptions about what users need.

Successful deployments also require careful attention to the dynamic nature of AI technology. The architecture's support for dynamic agent loading enables organizations to add specialized agents for new departments or use cases without disrupting existing services. Version control systems should maintain different agent versions for testing and gradual rollout of improvements, and performance monitoring tracks both technical metrics and user satisfaction to guide continuous improvement efforts.
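A gradual rollout of a new agent version can be as simple as hashing each user into a stable bucket. In the following sketch, the version labels are hypothetical. With AgentCore Runtime, they might correspond to different runtime endpoints selected through the invocation's `qualifier` parameter; check that against your setup. `rollout_bucket` and `choose_agent_version` are hypothetical names.

```python
import hashlib


def rollout_bucket(user_id: str, buckets: int = 100) -> int:
    """Hash a user ID into a stable bucket in [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def choose_agent_version(user_id: str, stable: str, candidate: str, candidate_percent: int) -> str:
    """Send roughly candidate_percent% of users to the candidate version.

    The same user always gets the same answer for a given percentage, and raising
    the percentage only moves users from stable to candidate, never back.
    """
    if not 0 <= candidate_percent <= 100:
        raise ValueError("candidate_percent must be between 0 and 100")
    return candidate if rollout_bucket(user_id) < candidate_percent else stable
```

Hashing instead of random sampling keeps each student on one version across sessions. Comparisons of user satisfaction between versions then reflect consistent experiences.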

Conclusion

The integration of LibreChat with Strands Agents represents a significant step forward in democratizing access to advanced AI capabilities in higher education. By combining the accessibility and customization of open source systems with the sophistication and reliability of enterprise-grade AI services, institutions can provide students and faculty with powerful tools that enhance learning, research, and academic success.

This architecture demonstrates that educational institutions don't need to choose between powerful AI capabilities and institutional control. Instead, they can take advantage of the innovation and flexibility of open source solutions with the scalability and reliability of cloud-based AI services. The integration example showcased in this post illustrates the solution's versatility and potential for customization as institutions expand and adapt the solution to meet evolving educational needs.

For future work, the LibreChat system’s Model Context Protocol (MCP) server integration capabilities offer exciting possibilities for enhanced agent architectures. A particularly promising avenue involves wrapping agents as MCP servers, transforming them into standardized tools that can be seamlessly integrated alongside other MCP-enabled agents. This approach would enable educators to compose sophisticated multi-agent workflows, creating highly personalized educational experiences tailored to individual learning styles.
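MCP messages are JSON-RPC 2.0. A server advertises its tools through `tools/list` and runs them through `tools/call`. The following sketch shows the shape of wrapping an agent as a single MCP tool. It is not a complete server: it has no `initialize` handshake, capability negotiation, or transport. `make_mcp_dispatcher`, the tool name, and the `prompt` argument are hypothetical. The error codes are the standard JSON-RPC ones for unknown methods (-32601) and invalid parameters (-32602).

```python
import json


def make_mcp_dispatcher(tool_name: str, description: str, run_agent):
    """Expose run_agent(prompt) -> str as one MCP tool over JSON-RPC 2.0 messages."""
    tool = {
        "name": tool_name,
        "description": description,
        "inputSchema": {
            "type": "object",
            "properties": {"prompt": {"type": "string"}},
            "required": ["prompt"],
        },
    }

    def reply(request_id, *, result=None, error=None):
        message = {"jsonrpc": "2.0", "id": request_id}
        if error is not None:
            message["error"] = error
        else:
            message["result"] = result
        return json.dumps(message)

    def dispatch(raw: str) -> str:
        request = json.loads(raw)
        request_id, method = request.get("id"), request.get("method")
        if method == "tools/list":
            return reply(request_id, result={"tools": [tool]})
        if method == "tools/call":
            params = request.get("params", {})
            if params.get("name") != tool_name:
                return reply(request_id, error={"code": -32602, "message": "Unknown tool"})
            text = run_agent(params.get("arguments", {}).get("prompt", ""))
            return reply(request_id, result={"content": [{"type": "text", "text": text}], "isError": False})
        return reply(request_id, error={"code": -32601, "message": "Method not found"})

    return dispatch
```

Because each wrapped agent looks like any other MCP tool, an orchestrating agent could discover it through `tools/list` and combine it with other MCP-enabled agents.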

The future of education is about having the right AI tools, properly integrated and ethically deployed, to enhance human learning and achievement through flexible, interoperable, and extensible solutions that can evolve with educational needs.
